Ohsumed is a well-known dataset of 34,389 documents classified into 23 classes (a document can belong to several classes). The documents use a vocabulary of 30,689 words, and the dataset is split randomly into an 80% part and a 20% part. I used the dataset provided on Alessandro Moschitti's corpora webpage, and more precisely the preprocessed data available on the Rate Adapting Poisson model site.

Please note that I have not managed to reproduce the LSI and TF-IDF results from "The Rate Adapting Poisson (RAP) model for Information Retrieval and Object Recognition" by Peter V. Gehler, Alex D. Holub and Max Welling (ICML 2006). A short email exchange with P. Gehler did not shed much light on the source of the difference. In any case, the most interesting part lies in the difference between the raw data (TF-IDF) and the processed data.
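To make the TF-IDF baseline concrete, here is a minimal sketch of a random 80/20 split followed by TF-IDF weighting and cosine-similarity ranking. It is not necessarily the exact pipeline behind my results or the RAP paper's: the raw-count × log(N/df) weighting, computing the IDF on the 80% part only, treating the 20% part as queries, and all function names (`split_80_20`, `fit_idf`, `tfidf`, `rank`) are assumptions for illustration.

```python
import math
import random
from collections import Counter

def split_80_20(items, seed=0):
    """Randomly split items into an 80% part and a 20% part."""
    rng = random.Random(seed)
    shuffled = list(items)
    rng.shuffle(shuffled)
    cut = int(0.8 * len(shuffled))
    return shuffled[:cut], shuffled[cut:]

def fit_idf(docs):
    """IDF = log(N / df) over tokenized documents (one of several common variants)."""
    n = len(docs)
    df = Counter()
    for doc in docs:
        df.update(set(doc))  # count each term once per document
    return {term: math.log(n / count) for term, count in df.items()}

def tfidf(doc, idf):
    """Sparse TF-IDF vector: raw term count times IDF; terms unseen in the fit are dropped."""
    counts = Counter(doc)
    return {t: c * idf[t] for t, c in counts.items() if t in idf}

def cosine(u, v):
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(w * v.get(t, 0.0) for t, w in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)

def rank(query_vec, db_vecs):
    """Indices of database vectors sorted by decreasing cosine similarity to the query."""
    scores = [cosine(query_vec, v) for v in db_vecs]
    return sorted(range(len(db_vecs)), key=lambda i: scores[i], reverse=True)

# Toy usage with hypothetical tokens (not Ohsumed data)
database = [["heart", "attack"], ["heart", "surgery"], ["lung", "cancer"]]
idf = fit_idf(database)
db_vecs = [tfidf(d, idf) for d in database]
order = rank(tfidf(["heart", "attack"], idf), db_vecs)
```

On the real data one would use sparse matrices rather than dicts, but the ranking logic is the same: each query document is compared to every database document and the candidates are sorted by similarity.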

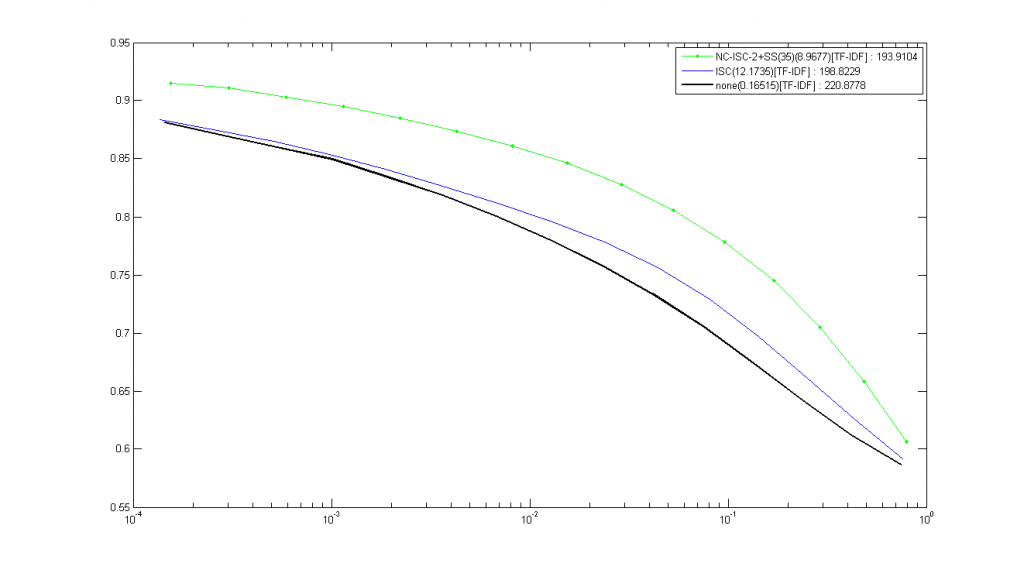

Here are my results:

If you look at the results in the RAP paper, you'll see that TF-IDF precision peaks at 0.84 and goes down to 0.66, while mine peaks at 0.88 and goes down to 0.59. My methodology for counting an answer as good or bad is the following: it is good if at least one class of the candidate document is also a class of the query document, and bad otherwise. Each answer scores either one or zero, with nothing in between. Comments about this are very welcome.
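The relevance criterion described above can be written as a short sketch: binary relevance (the documents share a class or they don't), precision at a cutoff k, and (recall, precision) points along one ranked list. It assumes the ranked list covers the whole database, so recall is relative to all relevant documents in it. The function names and the class labels in the example are hypothetical, and this is not the exact evaluation code behind the numbers above.

```python
def is_relevant(query_classes, candidate_classes):
    """1 if query and candidate share at least one class, 0 otherwise (no partial credit)."""
    return int(bool(set(query_classes) & set(candidate_classes)))

def precision_at_k(query_classes, ranked_classes, k):
    """Fraction of the top-k ranked candidates that are relevant to the query."""
    if k < 1 or not ranked_classes:
        raise ValueError("k must be at least 1 and the ranking must be non-empty")
    top = ranked_classes[:k]
    return sum(is_relevant(query_classes, c) for c in top) / len(top)

def precision_recall_points(query_classes, ranked_classes):
    """(recall, precision) after each rank, assuming ranked_classes covers the whole database."""
    relevance = [is_relevant(query_classes, c) for c in ranked_classes]
    total_relevant = sum(relevance)
    points, hits = [], 0
    for k, rel in enumerate(relevance, start=1):
        hits += rel
        recall = hits / total_relevant if total_relevant else 0.0
        points.append((recall, hits / k))
    return points

# Hypothetical example: class sets of the ranked candidates for one query document
query = {"C01", "C04"}
ranked = [{"C04"}, {"C02"}, {"C01", "C23"}, {"C05"}]
curve = precision_recall_points(query, ranked)
```

Averaging these per-query points over all query documents, for instance at fixed recall levels, is one common way to obtain a single precision curve; the averaging and interpolation scheme is an assumption here and is not specified in this post.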